They're All TOOLS, and I WANT Them to Talk Behind My Back

For many years, I had a ritual every time I flew to China or Hong Kong. I’d leave with an extra empty suitcase, knowing it would return home stuffed with books.

- In Beijing, I’d head straight to the bookstore at 北京语言大学 (Beijing Language and Culture University, or “Beiyu”), once the famous Beijing Language Institute.

- In Shanghai, it was 書城 (Book City).

- In Hong Kong, the maze of Mong Kok bookshops, where shelves groaned under new and second-hand academic treasures.

Some of the best phonetic models and linguistic analyses of everything from Urdu and Farsi to Burmese, Russian, and obscure Chinese dialects were written in Chinese. I’d pore over tone tables, consonant charts, and fold-out maps bigger than the books themselves, hunting for patterns across authors. At home, I’d spread these texts across my desk, cross-referencing, swapping datasets, trying to force the static pages into a dynamic conversation with one another.

It was exhilarating. But it was also deeply impractical.

From Flash to Frustration

When I first put my Cracking Thai Fundamentals interactive programme online in 2013, I built it in Articulate Storyline. At the time, it was state of the art: audio, video, green-screen footage, clickable hotspots. And the engine underneath was Flash.

That worked, until it didn’t. Steve Jobs buried Flash, browsers pulled the plug, and my once-gleaming course limped along. Media broke. Green-screen renders showed up as ugly black boxes. The fallback HTML5 version was clunky and uninspired.

The bigger problem? None of my tools could talk to each other. Quizzes were hard-coded. Learners gamed the system. The “wow” factor vanished.

I was left bailing water from a boat with a hole in the bottom.

Fast-Forward: Why I Built the ToolBus

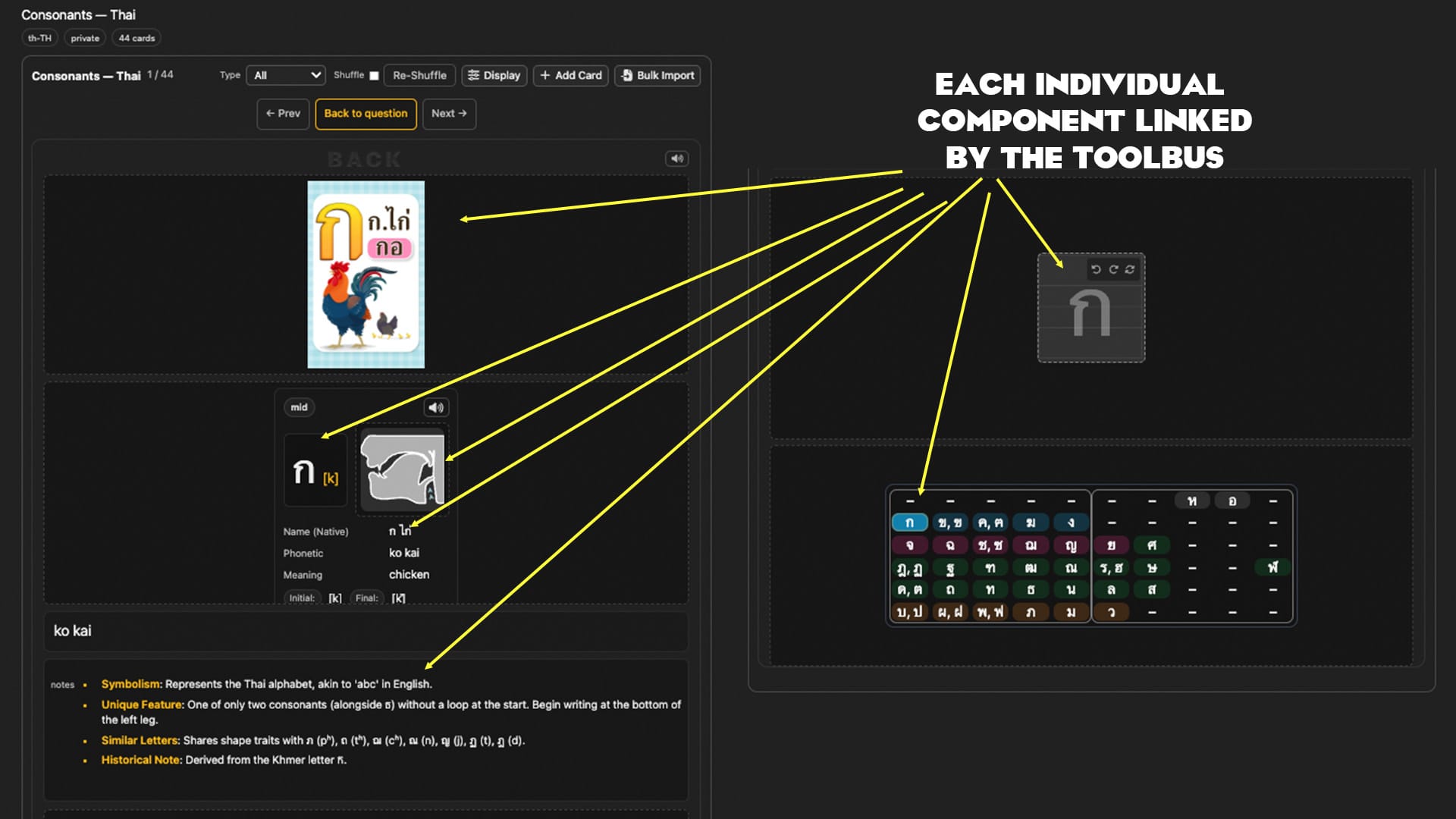

As I started building CLF with today’s tech, I didn’t just want standalone tools. I wanted them to breathe. To talk. To constantly take in fresh data, generate fresh challenges and, critically, talk behind the learner’s back.

So I built the ToolBus. Think of it like a subway system for data: every tool gets a ticket to ride.

- You generate a dialogue? The Tone Contours tool listens in, analyses the tones, renders graphs, and pipes the audio back instantly.

- Click on a word? The Mouth Map lights up, showing how each sound is articulated.

- Curious how that Thai word links to your Chinese background? A comparative tool spins up and overlays the Sino-lexicon.

- Practising a kanji? Stroke order animates in real time, while handwriting recognition checks whether you got it right.

All of this happens without you wiring each connection by hand. The ToolBus is the network; the tools are the stations. Once you plug in, the trains run themselves.
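A common way to build this kind of subway is publish/subscribe: tools publish events on named topics, and any tool subscribed to a topic receives them, so no tool needs to know which others exist. Here is a minimal sketch of that pattern. It is an assumption for illustration, not the actual ToolBus code: the `ToolBus` class, the topic names (`dialogue:generated`, `tones:analysed`) and the payload shapes are all hypothetical.

```typescript
// Hypothetical event catalogue: topic name -> payload shape.
type ToolEvents = {
  "dialogue:generated": { lang: string; text: string };
  "tones:analysed": { text: string; contours: number[][] };
};

type Handler<T> = (payload: T) => void;

// A minimal typed publish/subscribe bus. Publishers only name a topic;
// they never hold references to the tools that end up receiving the event.
class ToolBus<Events extends Record<string, unknown>> {
  // topic -> handlers, stored loosely; subscribe/publish enforce the types.
  private handlers = new Map<keyof Events, Handler<unknown>[]>();

  // Attach a handler to a topic; the returned function detaches it again.
  subscribe<K extends keyof Events>(topic: K, handler: Handler<Events[K]>): () => void {
    const loose = handler as Handler<unknown>;
    // Copy-on-write: a publish already in progress keeps iterating its old array.
    this.handlers.set(topic, [...(this.handlers.get(topic) ?? []), loose]);
    return () => {
      const remaining = (this.handlers.get(topic) ?? []).filter((h) => h !== loose);
      this.handlers.set(topic, remaining);
    };
  }

  // Deliver a payload to every handler subscribed to the topic at publish time.
  publish<K extends keyof Events>(topic: K, payload: Events[K]): void {
    for (const handler of this.handlers.get(topic) ?? []) {
      handler(payload);
    }
  }
}

// Usage: a hypothetical tone tool listens for new dialogues and publishes an analysis.
const bus = new ToolBus<ToolEvents>();
bus.subscribe("dialogue:generated", ({ text }) => {
  // Placeholder: a real tool would derive pitch contours from the audio.
  bus.publish("tones:analysed", { text, contours: [] });
});
bus.subscribe("tones:analysed", ({ text }) => console.log(`Graph ready for: ${text}`));
bus.publish("dialogue:generated", { lang: "th", text: "สวัสดีครับ" }); // prints "Graph ready for: สวัสดีครับ"
```

The design choice that matters is that publishers only name a topic. Adding a new station means subscribing to topics that already exist, not editing the tools already on the line.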

A Snapshot of Some of My Tools (So Far)

Some of the tools I’ve built (and wired into the ToolBus):

- Consonant Compass: see how Indic abugida consonant maps link to Thai, Chinese, Korean, and even Tolkien’s Tengwar.

- Vowel Compass: compare vowels across Indic, Chinese, and Korean systems, mapped to the ancient “map of the mouth.”

- Tone Master & Tone Assistant: practise tones across dialects and compare Ubon vs. Hanoi vs. Saigon.

- Word Spacer: break Thai, Burmese, Arabic, Chinese, and Japanese text into readable chunks.

- Pitch Accent Analyser (Japanese): sentences with pitch graphs, audio, romaji, kana, and translations.

- Thai Sentence + TTS Tool: instantly map the tones in Thai sentences, hear them spoken in multiple voices, and see tone contours live.

- Hanzi/Kanji Stroke Animator: trace characters with live stroke recognition.

- Abacus Interactive: traditional suanpan and soroban for maths fluency.

- News Tutor: paste in Thai news articles and get levelled summaries for kids, teens, and adults.

- Handwriting Grid: simulate old-school Thai/Chinese/Japanese copybooks.
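Finding word boundaries in scripts that don’t put spaces between words is a job modern JavaScript runtimes can approximate out of the box. The sketch below uses the standard `Intl.Segmenter` API, which relies on ICU dictionaries for languages such as Thai, Chinese, and Japanese. It is one possible approach under that assumption, not how the Word Spacer itself works, and the helper name `spaceWords` is hypothetical.

```typescript
// Split unspaced text into word-like chunks with the standard Intl.Segmenter API
// (ES2022: modern browsers, Node 16+ with full ICU data).
function spaceWords(text: string, locale: string): string[] {
  const segmenter = new Intl.Segmenter(locale, { granularity: "word" });
  const words: string[] = [];
  for (const { segment, isWordLike } of segmenter.segment(text)) {
    // Keep words only; whitespace and punctuation segments are not word-like.
    if (isWordLike) {
      words.push(segment);
    }
  }
  return words;
}

// Exact boundaries depend on the ICU dictionary bundled with the runtime.
console.log(spaceWords("ผมชอบกินข้าวผัด", "th").join(" | "));
```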

Here’s the kicker: these tools aren’t only for foreigners. They’re just as powerful for native-speaking kids.

Instead of rote repetition or dull drills, Thai children can see how tones work in their own language. Chinese learners can practise stroke order with live feedback, not just copybooks. Japanese students can watch pitch accent unfold in real time. These tools replace outdated drill-based methods with interactive, visual, and adaptive systems that make sense for everyone.

Each tool is powerful alone. Together on the ToolBus, their power multiplies.

Why This Matters for Any Educator, Teacher, Coach, Training Department, or Anyone in Between

This isn’t just about linguistics. Language is my proof of concept.

But imagine:

- A business coach builds a negotiation simulator.

- An HR team wires performance tools into onboarding.

- A sports coach plugs biomechanics data into training drills.

As long as their tool follows the schema (props in, props out), it can join the ToolBus. Technically, all a content expert needs to do is:

- Build (or have built) a Svelte/React tool.

- Register it with the system.

- Host it (GitHub, server, wherever).
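What “props in, props out” might look like in practice: a manifest that declares what a tool listens for and what it emits, plus a tiny registry that works out the wiring from those declarations. This is a hedged sketch; the field names (`consumes`, `produces`, `entryUrl`) and the `ToolRegistry` class are illustrative assumptions, not the real ToolBus schema.

```typescript
// Hypothetical "props in, props out" contract for a pluggable tool.
// Field names are illustrative assumptions, not the real ToolBus schema.
interface ToolManifest {
  id: string;         // unique identifier, e.g. "tone-contours"
  name: string;       // human-readable label
  entryUrl: string;   // where the hosted Svelte/React bundle is served from
  consumes: string[]; // topics the tool listens to (props in)
  produces: string[]; // topics the tool emits (props out)
}

class ToolRegistry {
  private tools = new Map<string, ToolManifest>();

  // Store a manifest after basic checks: unique id and an http(s) entry URL.
  register(manifest: ToolManifest): void {
    if (this.tools.has(manifest.id)) {
      throw new Error(`Tool "${manifest.id}" is already registered`);
    }
    if (!/^https?:\/\//.test(manifest.entryUrl)) {
      throw new Error(`Tool "${manifest.id}" needs an http(s) entryUrl`);
    }
    this.tools.set(manifest.id, manifest);
  }

  // Ids of every registered tool that listens to a topic: the wiring is
  // derived from the manifests rather than coded by hand.
  consumersOf(topic: string): string[] {
    return Array.from(this.tools.values())
      .filter((tool) => tool.consumes.includes(topic))
      .map((tool) => tool.id);
  }
}

// Usage with two hypothetical hosted tools.
const registry = new ToolRegistry();
registry.register({
  id: "tone-contours",
  name: "Tone Contours",
  entryUrl: "https://example.com/tools/tone-contours.js",
  consumes: ["dialogue:generated"],
  produces: ["tones:analysed"],
});
registry.register({
  id: "mouth-map",
  name: "Mouth Map",
  entryUrl: "https://example.com/tools/mouth-map.js",
  consumes: ["word:clicked"],
  produces: [],
});
const listeners = registry.consumersOf("dialogue:generated"); // ["tone-contours"]
```

Keeping the contract declarative means a content expert only describes their tool; the registry, not the expert, decides which other tools it gets connected to.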

From there, their expertise runs live in the learner’s arena. Their tools talk. Their data integrates. Their impact multiplies.

This isn’t about replacing teachers. It’s about putting them on steroids. Suddenly, the wisdom you’ve built over decades isn’t locked in a textbook or your head. It’s living, linked, interactive, and ready to be amplified.

Message to Educators and Experts

We need a mindset shift.

Stop thinking of “learning resources” as static PDFs, quizzes, or slides. Start thinking in tools, data, and conversations.

- Use human expertise to generate data and systems.

- Use tech to keep it alive, linked, and adaptive.

- Keep AI on a leash: conductor, not overlord.

The renaissance we need is not more AI for AI’s sake. It’s human expertise, wired smartly, talking behind the learner’s back. That’s what makes education feel alive again.

Next time: I’ll lift the lid on one of these tools and show you exactly how the ToolBus lets it work smarter, not harder.